The UCSB Center for Research in Electronic Art Technology (CREATE) was established in 1986. It is situated within the Department of Music and has strong ties to the Media Arts and Technology program and the AlloSphere research facility. CREATE serves as a productive environment for students, researchers, and media artists to realize music and multimedia works. We present several concerts of electroacoustic music per year. Courses are offered at the undergraduate and graduate levels in collaboration with several departments. The center also serves as a laboratory for research and development of a new generation of software and hardware tools to aid in media-based composition. CREATE is committed to maintaining the highest possible level of artistic and technological capability, and professional composers will find the center a productive place to realize their works. Among those who have made use of our facilities are Iannis Xenakis, Thea Musgrave, Bebe Barron, Zbigniew Karkowski, and Robert Morris.

News

The Center for Research in Electronic Art Technology (CREATE) presents a concert at Lotte Lehmann Concert Hall. Friday, April 19th, 2024 at 7:30pm.

The concert features works by Earl Howard (performing live on synthesizer and saxophone), Paris-based composer Horacio Vaggione, the late Corwin Chair Clarence Barlow, UCSB Music Composition graduate student Dariush Derekshani, and CREATE’s Associate Director Curtis Roads.


This event is free admission and open to the public.

Sound Synthesis by Computer: Models for the Interaction of the Player with Strings, a lecture by Professor Gianpaolo Evangelista. Thursday, October 19th, 2023 at 5pm. Room 387-1015 (next to the Music Building parking lot).

Models of the interaction between player and instrument are fundamental to the accurate synthesis of sound using physically inspired models. Depending on the instrument, the palette of possible interactions is generally very broad and includes the coupling of body parts, mechanical objects, and other devices with various components of the instrument. In this talk we focus on the interaction of the player with strings, whose simulation requires accurate models of the fingers, dynamic models of the bow and the plectrum, and models of the friction of objects such as bottleneck slides. We also consider collisions and imperfect pressure on the fingerboard, both as important side effects and as playing styles. Our models do not depend on a specific numerical implementation; here they are illustrated using the digital waveguide scheme.
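For readers unfamiliar with the digital waveguide scheme mentioned in the abstract, here is a minimal sketch of an ideal plucked string, assuming rigid terminations and a single frequency-independent loss factor. The function `waveguide_pluck` and its parameters are hypothetical and chosen for illustration; the sketch does not model the finger, bow, plectrum, or slide interactions discussed in the talk, and it is not Professor Evangelista's implementation.

```python
def waveguide_pluck(freq=220.0, sr=44100, dur=0.5, pluck_pos=0.2,
                    pickup_pos=0.8, loss=0.996):
    """Ideal plucked string as a bidirectional digital waveguide (illustrative).

    Two delay lines of n samples hold the right- and left-going displacement
    waves. At each rigid end the arriving wave is inverted (reflection -1) and
    scaled by `loss`, a crude stand-in for all damping in the string.
    """
    n = max(2, int(round(sr / (2.0 * freq))))     # one-way travel time in samples
    peak = max(1, min(n - 1, int(pluck_pos * n)))
    # Triangular initial displacement peaking at the pluck point, split equally
    # between the two traveling waves (string initially at rest).
    shape = [i / peak if i <= peak else (n - i) / (n - peak) for i in range(n)]
    right = [0.5 * s for s in shape]
    left = [0.5 * s for s in shape]
    pickup = min(n - 1, int(pickup_pos * n))
    out = []
    for _ in range(int(sr * dur)):
        # Physical displacement at the pickup is the sum of both traveling waves.
        out.append(right[pickup] + left[pickup])
        at_bridge, at_nut = right[-1], left[0]
        # Shift both waves one sample and close the loop through the terminations.
        right = [-loss * at_nut] + right[:-1]
        left = left[1:] + [-loss * at_bridge]
    return out
```

The two delay lines form a loop of `2n` samples, so a disturbance returns to its starting point after `2n` samples, passing both terminations once; this sets the pitch at roughly `sr / (2n)`. Player-interaction models of the kind described in the talk could replace the simple inverting reflections or act at points along the delay lines.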

Gianpaolo Evangelista is Professor of Music Informatics at the University of Music and Performing Arts Vienna, Austria. Previously he was Professor of Sound Technology at Linköping University, Sweden; researcher and assistant professor at the University “Federico II” of Naples, Italy; and adjunct professor at the Polytechnic of Lausanne (EPFL), Switzerland. He received the Laurea in Physics from the University “Federico II” of Naples and the Master's and PhD degrees in Electrical and Computing Engineering from the University of California, Irvine. He has collaborated with several musicians, including Iannis Xenakis (Paris) and Curtis Roads. His interests are in all applications of signal processing, physics, and mathematics to sound and music, particularly analysis, synthesis, special effects, and the separation of sound sources.

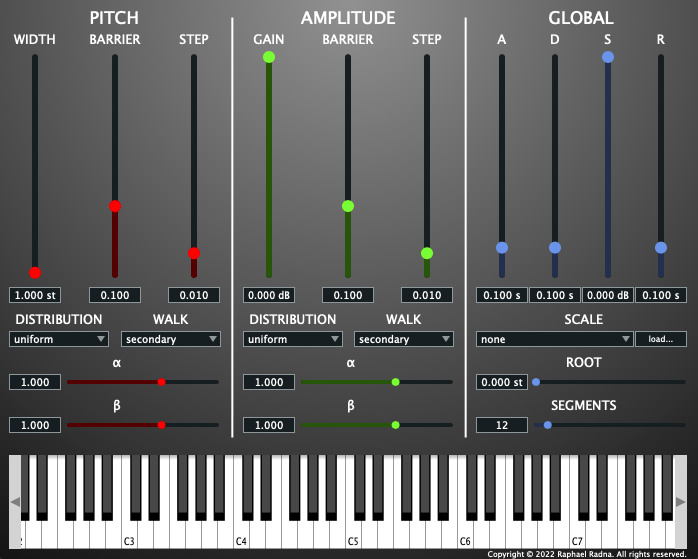

Xenos, a new virtual instrument plug-in that implements and extends the Dynamic Stochastic Synthesis (DSS) algorithm, has been released. Developed by MAT alumnus Raphael Radna as part of his master's degree, Xenos implements DSS with modifications and extensions that enhance its suitability for general composition.

Released in March 2023, Xenos is a virtual instrument plug-in that implements and extends the Dynamic Stochastic Synthesis (DSS) algorithm invented by Iannis Xenakis and notably employed in the 1991 composition GENDY3. DSS produces a wave of variable periodicity through regular stochastic variation of its wave cycle, resulting in emergent pitch and timbral features. While high-level parametric control of the algorithm enables a variety of musical behaviors, composing with DSS is difficult because its parameters lack basis in perceptual qualities.
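The core idea of DSS can be sketched in a few lines. This is a minimal illustration, not Xenos's code: it assumes uniform random steps, simple first-order random walks, and reflecting ("mirror") barriers, whereas Xenakis's own programs offered a choice of probability distributions and nested random walks. The function names `mirror` and `dss` and all default values are hypothetical.

```python
import random


def mirror(x, lo, hi):
    """Reflect x off the barriers lo and hi (lo < hi) until it lies in [lo, hi]."""
    while x < lo or x > hi:
        x = 2 * lo - x if x < lo else 2 * hi - x
    return x


def dss(num_periods, n_points=12, amp_step=0.1, dur_step=2.0,
        min_dur=1.0, max_dur=20.0, seed=0):
    """Render num_periods wave cycles of a simplified Dynamic Stochastic Synthesis.

    Each cycle has n_points breakpoints. Before every cycle, each breakpoint's
    amplitude and duration (in samples) take a bounded random-walk step, and the
    cycle is drawn by linear interpolation between consecutive breakpoints.
    Returns the samples and the length of each cycle in samples.
    """
    rng = random.Random(seed)
    amps = [0.0] * n_points
    durs = [(min_dur + max_dur) / 2.0] * n_points
    samples, period_lengths = [], []
    for _ in range(num_periods):
        # Random-walk every breakpoint, folding values back inside the barriers.
        for i in range(n_points):
            amps[i] = mirror(amps[i] + rng.uniform(-amp_step, amp_step), -1.0, 1.0)
            durs[i] = mirror(durs[i] + rng.uniform(-dur_step, dur_step), min_dur, max_dur)
        # Draw one cycle; the last segment wraps around to the first breakpoint.
        length = 0
        for i in range(n_points):
            a0, a1 = amps[i], amps[(i + 1) % n_points]
            seg = max(1, round(durs[i]))       # segment length in whole samples
            for k in range(seg):
                samples.append(a0 + (a1 - a0) * k / seg)
            length += seg
        period_lengths.append(length)
    return samples, period_lengths
```

Each rendered cycle lasts `period_lengths[j]` samples, so its emergent frequency is the sample rate divided by that length; for example, a 100-sample cycle at 44.1 kHz sounds at 441 Hz. Narrow duration barriers keep the pitch fairly stable, while wide ones let it wander.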

Xenos thus implements DSS with modifications and extensions that enhance its suitability for general composition. Written in C++ using the JUCE framework, Xenos offers DSS in a convenient, efficient, and widely compatible polyphonic synthesizer that facilitates composition and performance through host-software features, including MIDI input and parameter automation. Xenos also introduces a pitch-quantization feature that tunes each period of the wave to the nearest frequency in an arbitrary scale. Custom scales can be loaded via the Scala tuning standard, enabling both xenharmonic composition at the mesostructural level and investigation of the timbral effects of microtonal pitch sets on the microsound timescale.
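Pitch quantization against a Scala scale can be sketched as follows. This is an illustrative sketch under stated assumptions, not Xenos's implementation: it reads the plain-text .scl layout (comment lines begin with `!`, followed by a description line, a pitch count, and one pitch per line, given in cents if it contains a period and as a ratio otherwise), treats the last listed pitch as the interval of repetition, and snaps a frequency to the nearest scale pitch in log-frequency. The function names `parse_scl` and `quantize` and the reference frequency are hypothetical.

```python
import math


def parse_scl(text):
    """Return a scale's pitches in cents from Scala .scl text, with 0.0 prepended.

    Comment lines start with '!'. The first remaining line is a description,
    the second the number of pitches; each following line holds one pitch, in
    cents if it contains a '.', otherwise a ratio such as 3/2 or 2.
    """
    body = [ln for ln in text.splitlines() if not ln.lstrip().startswith("!")]
    count = int(body[1].split()[0])
    cents = [0.0]
    for ln in body[2:2 + count]:
        token = ln.split()[0]
        if "." in token:
            cents.append(float(token))
        else:
            num, _, den = token.partition("/")
            cents.append(1200.0 * math.log2(int(num) / int(den or 1)))
    return cents


def quantize(freq, scale_cents, ref_freq):
    """Snap freq to the nearest scale pitch, measuring distance in cents.

    scale_cents[-1] is the interval of repetition (1200.0 for an octave), and
    the scale's degree 0 sits at ref_freq.
    """
    period = scale_cents[-1]
    degrees = scale_cents[:-1]
    c = 1200.0 * math.log2(freq / ref_freq)
    rep = math.floor(c / period)
    # Candidates: every degree in this repetition, plus the next repetition's root.
    candidates = [rep * period + d for d in degrees] + [(rep + 1) * period]
    best = min(candidates, key=lambda x: abs(x - c))
    return ref_freq * 2.0 ** (best / 1200.0)
```

For example, with a just major triad (5/4, 3/2, 2/1) built on 200 Hz, an input of 290 Hz is snapped to 300 Hz. In a DSS context, one way to apply this per cycle would be to compute the cycle's frequency as the sample rate divided by its length in samples, quantize it, and rescale that cycle's breakpoint durations to the quantized length.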

A good review of Xenos can be found at MusicRadar: www.musicradar.com/news/fantastic-free-synths-xenos.

Xenos GitHub page: github.com/raphaelradna/xenos.

An introductory video is also available on YouTube.

Raphael completed his master's degree in Media Arts and Technology in fall 2022 and is currently pursuing a PhD in Music Composition at UCSB.

The Department of Music, in association with the Center for Research in Electronic Art Technology, has released Space Control, a new software application for sound spatialization.

Space Control is a multitrack workstation dedicated to the design, realization, and mixture of spatial gestures for electroacoustic music composition. With its simple interface and minimal learning curve, it makes quick and powerful spatialization available to users of all experience levels.
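As a generic illustration of what rendering a spatial gesture involves, the sketch below moves a source around a ring of equally spaced loudspeakers using pairwise constant-power gains. This is a common textbook technique chosen as an assumption for illustration; it does not describe Space Control's own panning method, and the functions `ring_gains` and `circular_gesture` are hypothetical.

```python
import math


def ring_gains(azimuth_deg, num_speakers=8):
    """Constant-power pairwise panning gains for speakers evenly spaced on a ring.

    Speaker k sits at k * 360 / num_speakers degrees. The source is panned
    between the two adjacent speakers that bracket its azimuth with a
    cosine/sine law, so the squared gains always sum to 1.
    """
    spacing = 360.0 / num_speakers
    pos = (azimuth_deg % 360.0) / spacing
    k = int(pos) % num_speakers          # speaker at or just below the source
    frac = pos - int(pos)                # 0..1 position between speakers k and k+1
    gains = [0.0] * num_speakers
    gains[k] = math.cos(frac * math.pi / 2)
    gains[(k + 1) % num_speakers] = math.sin(frac * math.pi / 2)
    return gains


def circular_gesture(duration_s, revolutions=1.0, rate_hz=100, num_speakers=8):
    """Sample a circular trajectory as a list of per-frame speaker gain vectors."""
    frames = int(duration_s * rate_hz)
    return [ring_gains(360.0 * revolutions * i / frames, num_speakers)
            for i in range(frames)]
```

Because only the two loudspeakers bracketing the source are active and their squared gains sum to one, perceived loudness stays roughly constant as the source travels around the ring.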

Released in June 2022, Space Control was created by the team of Professor João Pedro Oliveira, acting as project manager, and software developer Raphael Radna. Radna is a PhD candidate in Music Composition at UC Santa Barbara and is also pursuing a Master of Science degree in the Media Arts and Technology Graduate Program at UCSB.

Space Control runs on Apple computers and is available for download on GitHub.

A Quick Start video is also available on YouTube.

Space Control interface:

The project was supported by a Faculty Research Grant from the UCSB Academic Senate.

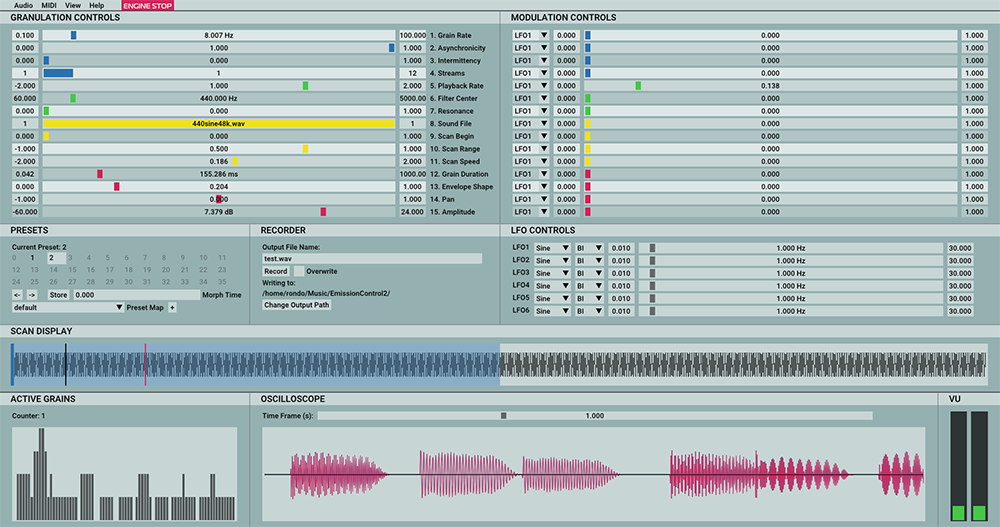

The Center for Research in Electronic Art Technology has released a new software app for sound granulation: EmissionControl2 for macOS, Linux, and Windows.

EmissionControl2 is a granular sound synthesizer. The theory of granular synthesis is described in the book Microsound (Curtis Roads, 2001, MIT Press).
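The basic mechanism of granular synthesis can be sketched as overlap-adding many short, windowed excerpts ("grains") of a source sound. The following minimal sketch assumes a Hann grain envelope, regularly spaced grain onsets, and a randomly jittered read position in the source; the functions `hann` and `granulate` and their parameter names are hypothetical and are not EmissionControl2's controls or code.

```python
import math
import random


def hann(n):
    """Hann window of length n (n >= 2), rising from 0 to 1 and back to 0."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def granulate(source, sr, out_dur, grain_rate=50.0, grain_dur=0.05,
              scan=0.5, jitter=0.1, seed=1):
    """Overlap-add Hann-windowed grains read from `source` (a list of samples).

    grain_rate is in grains per second and grain_dur in seconds; scan and
    jitter are fractions of the source length (0..1). The source must be at
    least one grain long.
    """
    rng = random.Random(seed)
    out = [0.0] * int(out_dur * sr)
    glen = max(2, int(grain_dur * sr))       # grain length in samples
    win = hann(glen)
    hop = sr / grain_rate                    # samples between grain onsets
    t = 0.0
    while t < len(out):
        # Read position: the scan point plus random jitter, clamped to the source.
        pos = min(max(scan + rng.uniform(-jitter, jitter), 0.0), 1.0)
        start = int(pos * (len(source) - glen))
        onset = int(t)
        # Window the grain and add it into the output, stopping at the end.
        for i in range(min(glen, len(out) - onset)):
            out[onset + i] += win[i] * source[start + i]
        t += hop
    return out
```

With `grain_rate=50` and `grain_dur=0.05`, each grain lasts 50 ms and a new one starts every 20 ms, so about 2.5 grains overlap at any moment. Varying the rate, duration, and read position over time is what gives granular textures their characteristic range, from smooth clouds to pointillistic streams.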

Released in October 2020, the new app was developed by a team consisting of Professor Curtis Roads acting as project manager, with software developers Jack Kilgore and Rodney Duplessis. Kilgore is a computer science major at UCSB. Duplessis is a PhD student in music composition at UCSB and is also pursuing an MS degree in Media Arts and Technology.

EmissionControl2 is free and open-source software available on GitHub.

The project was supported by a Faculty Research Grant from the UCSB Academic Senate.